Thanks to DALL·E 2, we finally have a very nice graphic representation of how a Docker container feels inside a macOS environment. With this article, I will try to bring this poor container safely to shore.

TL;DR

At the time of writing, the only viable options for decent performance and a good DX are:

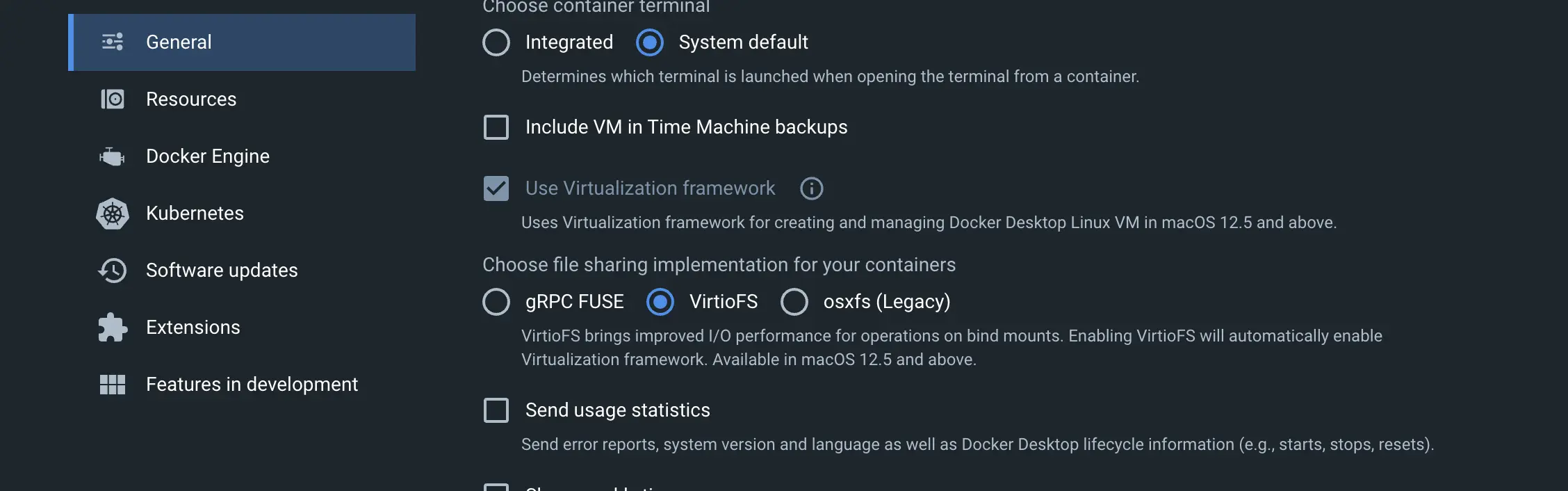

- VirtioFS to share the filesystem (Docker Desktop, Rancher Desktop, Colima) - There are still some issues.

- Use named volumes and if you use VSCode you can rely on things like DevContainers to have a good DX - 🚀 BONUS: PoC project with Backstage and DevContainers.

- Use DDEV + Mutagen for PHP projects (JS coming soon).

- If you are a vi/Emacs user, all you need is your editor and tools in a container, or if you want a minimal Linux GUI env, take some inspiration here.

Update 15/02/2023

It is still a beta option at the time of writing, but it looks promising: starting from Docker Desktop 4.16.0 (https://docs.docker.com/desktop/release-notes/#4160), it is possible to use Rosetta 2 instead of QEMU to run x86 containers. You can enable it from the experimental features tab in the settings, and it dramatically improves emulation performance.

How does Docker work on macOS?

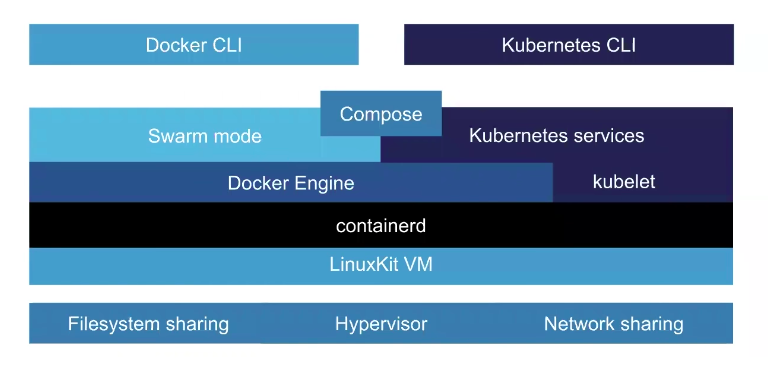

Docker architecture on macOS - Source https://collabnix.com/how-docker-for-mac-works-under-the-hood

To run Linux containers, the Docker engine on macOS and Windows needs a Linux kernel; there are no exceptions here. You do not see it, but it is there to do all the dirty work (HN: https://news.ycombinator.com/item?id=11352594).

The Docker CLI and docker-compose, on the other hand, are native binaries on all operating systems.

Two things are worth mentioning here regarding Microsoft. The first is that Windows natively supports Docker for running Windows containers (and this can sometimes lead to confusion).

This implementation has been possible thanks to the joint effort of Microsoft and Docker in 2016 to create a container engine implementing the Docker specification on Windows; kudos to you, MS.

The second is that Microsoft tried in the past to natively support Linux processes by translating Linux syscalls in real time, so that unmodified Linux binaries could run on the Windows kernel (WSL1).

You can find here a very detailed deep dive into this brilliant technology; even though Microsoft still supports it, it has significant limitations in terms of performance and compatibility. This is why Microsoft released a new engine, WSL2, which is based on a more traditional approach of a lightweight virtual machine running an unmodified Linux kernel plus some kernel modules to better integrate on Windows.

Anyway, let’s go back to the topic.

Docker for Mac is the official Docker Inc. product for running Docker containers on macOS as seamlessly as possible; it even supports Kubernetes.

Some notable features of Docker for Mac:

- Docker for Mac runs in a LinuxKit VM and recently switched to the Virtualization Framework instead of HyperKit.

- Filesystem sharing is implemented on a proprietary technology called OSXFS. Since it is slow for most of the use cases where tons and tons of files are involved (yes, I am looking at you, Node, and PHP), a new player is slowly ramping up from the labs; I am talking about VirtioFS - which seems very promising.

- Networking is based on VPNKit.

- It can run Kubernetes.

- It is a closed-source product and, under certain conditions, requires a paid subscription (at the time of writing: “Companies with more than 250 employees OR more than $10 million in annual revenue”).

There are two valid OSS alternatives: Rancher Desktop and Colima.

They both use Lima, filesystem-sharing issues are tracked here, and VirtioFS has just landed. It is interesting to see that the consensus around VirtioFS is confirmed, and once again, open source sets the rules.

So, to sum up:

- Docker containers are still Linux processes and need a Virtual Machine to run on other operating systems.

- Docker-cli and docker-compose are native binaries.

- As Docker runs in a virtual machine, something is required to share the host filesystem with the VM; we can choose between OSXFS (proprietary and deprecated), gRPC FUSE, or VirtioFS. They all come with issues, and VirtioFS is the most promising one.

- To run Docker containers on Mac, you can choose between Docker for Mac (closed-source), Rancher Desktop (OSS), and Colima (OSS).

What is a Docker volume?

Now that we know how things work under the hood, we can quickly dive into another topic that often causes confusion: Docker volumes.

Docker containers are ephemeral, which means that files inside a container exist only as long as the container exists. Let’s see an example:

    ➜ ~ # Run a container and keep it running for 300 seconds.

    ➜ ~ docker run -d --name ephemeral busybox sh -c "sleep 300"
    d0b02322a9eef184ab00d6eee34cbd22466e7f7c1de209390eaaacaa32a48537

    ➜ ~ # Inspect a container to find the host PID.
    ➜ ~ docker inspect --format '{{ .State.Pid }}' d0b02322a9eef184ab00d6eee34cbd22466e7f7c1de209390eaaacaa32a48537
    515584

    ➜ ~ # Find the host path of the container filesystem (in this case is a btrfs volume)
    ➜ ~ sudo cat /proc/515584/mountinfo | grep subvolumes
    906 611 0:23 /@/var/lib/docker/btrfs/subvolumes/b237a173b0ba81eb0a60d35b59b0cc5ed[truncated]

    ➜ ~ # Read the container filesystem from the host.
    ➜ ~ sudo ls -ltr /var/lib/docker/btrfs/subvolumes/b237a173b0ba81eb0a60d35b59b0cc5ed7247423bed4b4dcbb5af57a1c3318eb
    total 4
    lrwxrwxrwx 1 root root 3 Nov 17 21:00 lib64 -> lib
    drwxr-xr-x 1 root root 270 Nov 17 21:00 lib
    drwxr-xr-x 1 root root 4726 Nov 17 21:00 bin
    drwxrwxrwt 1 root root 0 Dec 5 22:00 tmp
    drwx------ 1 root root 0 Dec 5 22:00 root
    drwxr-xr-x 1 root root 16 Dec 5 22:00 var
    drwxr-xr-x 1 root root 8 Dec 5 22:00 usr
    drwxr-xr-x 1 nobody nobody 0 Dec 5 22:00 home
    drwxr-xr-x 1 root root 0 Dec 10 18:37 proc
    drwxr-xr-x 1 root root 0 Dec 10 18:37 sys
    drwxr-xr-x 1 root root 148 Dec 10 18:37 etc
    drwxr-xr-x 1 root root 26 Dec 10 18:37 dev

    ➜ ~ # Now write something from the container and see if it's reflected on the host filesystem.
    ➜ ~ docker ps -l
    CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
    d0b02322a9ee busybox "sh -c 'sleep 300'" 4 minutes ago Up 4 minutes ephemeral
    ➜ ~ docker exec -it d0b02322a9ee touch hello-from-container
    ➜ ~ sudo ls -ltr /var/lib/docker/btrfs/subvolumes/b237a173b0ba81eb0a60d35b59b0cc5ed7247423bed4b4dcbb5af57a1c3318eb | grep hello
    -rw-r--r-- 1 root root 0 Dec 10 18:42 hello-from-container

    ➜ ~ # Now write something from the host to see reflected on the container.
    ➜ ~ sudo touch /var/lib/docker/btrfs/subvolumes/b237a173b0ba81eb0a60d35b59b0cc5ed7247423bed4b4dcbb5af57a1c3318eb/hello-from-the-host
    ➜ ~ docker exec -it d0b02322a9ee sh -c "ls | grep hello-from-the-host"
    hello-from-the-host

    ➜ ~ # Stop the container.
    ➜ ~ docker stop d0b02322a9ee
    ➜ ~ sudo ls -ltr /var/lib/docker/btrfs/subvolumes/b237a173b0ba81eb0a60d35b59b0cc5ed7247423bed4b4dcbb5af57a1c3318eb
    total 4
    lrwxrwxrwx 1 root root 3 Nov 17 21:00 lib64 -> lib
    drwxr-xr-x 1 root root 270 Nov 17 21:00 lib
    drwxr-xr-x 1 root root 4726 Nov 17 21:00 bin
    drwxrwxrwt 1 root root 0 Dec 5 22:00 tmp
    drwx------ 1 root root 0 Dec 5 22:00 root
    drwxr-xr-x 1 root root 16 Dec 5 22:00 var
    drwxr-xr-x 1 root root 8 Dec 5 22:00 usr
    drwxr-xr-x 1 nobody nobody 0 Dec 5 22:00 home
    drwxr-xr-x 1 root root 0 Dec 10 18:44 sys
    drwxr-xr-x 1 root root 0 Dec 10 18:44 proc
    drwxr-xr-x 1 root root 148 Dec 10 18:44 etc
    drwxr-xr-x 1 root root 26 Dec 10 18:44 dev
    -rw-r--r-- 1 root root 0 Dec 10 18:45 hello-from-the-host

    ➜ ~ # Filesystem still exists until it is just stopped, but now let's remove it.
    ➜ ~ docker rm ephemeral
    ephemeral
    ➜ ~ sudo ls -ltr /var/lib/docker/btrfs/subvolumes/b237a173b0ba81eb0a60d35b59b0cc5ed7247423bed4b4dcbb5af57a1c3318eb
    ls: cannot access '/var/lib/docker/btrfs/subvolumes/b237a173b0ba81eb0a60d35b59b0cc5ed7247423bed4b4dcbb5af57a1c3318eb': No such file or directory

So, what happened here:

- While running or stopped, the container has an allocated filesystem on the host.

- Removing a container removes the associated filesystem too.

If you want to dive deeper into this subject, I cannot recommend these excellent readings enough:

- Deep Dive into Docker Internals - Union Filesystem

- Where are my container’s files? Inspecting container filesystems

Now that we have a clearer understanding of how Docker manages the filesystem, it is easy to see how risky it can be to use a container without a proper data persistence layer. This is exactly where Docker volumes come in handy.

Docker volumes and bind mounts

Bind mounts

As the documentation states: “Bind mounts have been around since the early days of Docker. Bind mounts have limited functionality compared to volumes. A file or directory on the host machine is mounted into a container when you use a bind mount. The file or directory is referenced by its absolute path on the host machine. By contrast, when you use a volume, a new directory is created within Docker’s storage directory on the host machine, and Docker manages that directory’s contents.”

This is all you need to bind mount a host directory inside a container:

    ➜ docker run -v <host-path>:<container-path> <image>

Let’s see a quick real-world example of how bind mounts work:

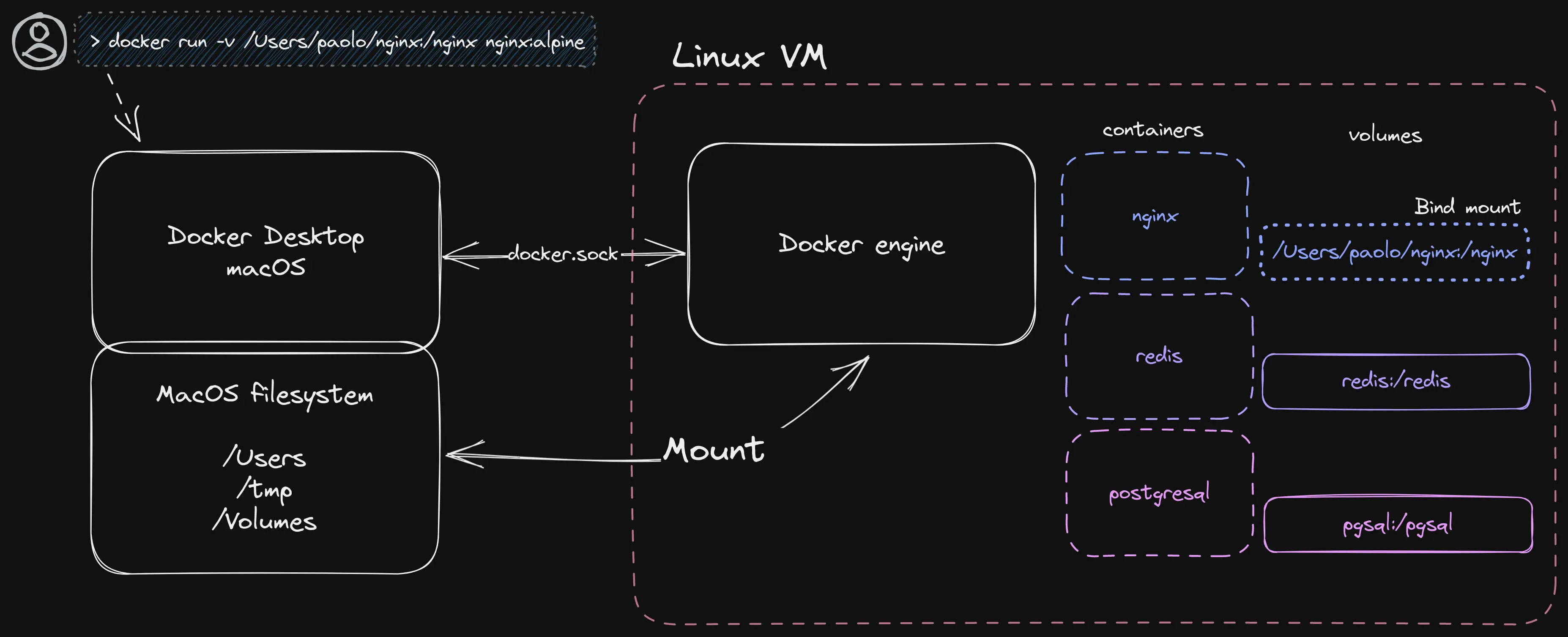

And yes, this is precisely where all the problems begin.

A bind mount is a way to mount the host filesystem inside a container, and as you can imagine, when a VM sits between us and the container, everything gets more complicated.

From 10 thousand feet, it works like this:

Docker bind mount diagram

So, when you mount a path from your Mac, you are just asking the Linux VM to mount the path of the network-shared filesystem of your Mac.

Usually, you do not need to configure this tedious part manually; the tools automatically do it for you.

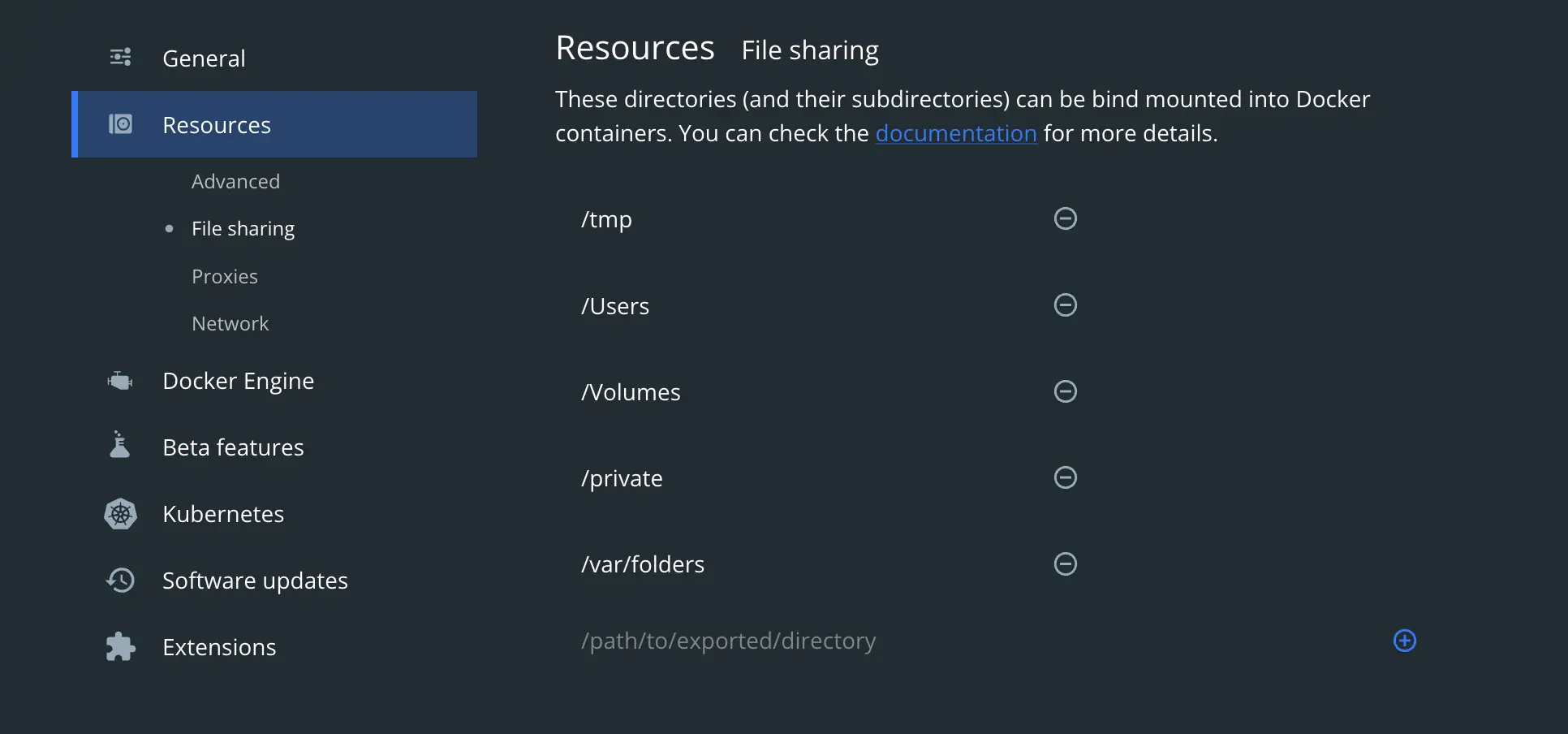

You can find what is mounted in Docker Desktop under:

Preferences -> Resources -> File sharing

Docker Desktop bind mount settings

As you can see from the image, local paths like /Users are mounted at the same path in the Linux VM, and thanks to this, we can mount Mac directories as if they were local:

    ➜ ~ docker run --rm -it -v /Users/paolomainardi:$(pwd) alpine ash
    / # ls -ltr
    total 60
    drwxr-xr-x 12 root root 4096 May 23 2022 var
    drwxr-xr-x 7 root root 4096 May 23 2022 usr
    drwxrwxrwt 2 root root 4096 May 23 2022 tmp
    drwxr-xr-x 2 root root 4096 May 23 2022 srv
    drwxr-xr-x 2 root root 4096 May 23 2022 run
    drwxr-xr-x 2 root root 4096 May 23 2022 opt
    drwxr-xr-x 2 root root 4096 May 23 2022 mnt
    drwxr-xr-x 5 root root 4096 May 23 2022 media
    drwxr-xr-x 7 root root 4096 May 23 2022 lib
    drwxr-xr-x 2 root root 4096 May 23 2022 home
    drwxr-xr-x 2 root root 4096 May 23 2022 sbin
    drwxr-xr-x 2 root root 4096 May 23 2022 bin
    dr-xr-xr-x 13 root root 0 Dec 11 14:33 sys
    dr-xr-xr-x 260 root root 0 Dec 11 14:33 proc
    drwxr-xr-x 1 root root 4096 Dec 11 14:33 etc
    drwxr-xr-x 5 root root 360 Dec 11 14:33 dev
    drwxr-xr-x 3 root root 4096 Dec 11 14:33 Users
    drwx------ 1 root root 4096 Dec 11 14:33 root
    / # ls -ltr /Users/paolomainardi/
    total 4
    drwxr-xr-x 4 root root 128 Dec 18 2021 Public
    drwx------ 4 root root 128 Dec 18 2021 Music
    drwx------ 5 root root 160 Dec 18 2021 Pictures
    drwx------ 4 root root 128 Dec 18 2021 Movies
    drwxr-xr-x 4 root root 128 Dec 18 2021 bin
    drwxr-xr-x 4 root root 128 Dec 31 2021 go
    -rw-r--r-- 1 root root 98 Apr 1 2022 README.md
    drwxr-xr-x 5 root root 160 Apr 3 2022 webapps
    drwx------ 6 root root 192 Nov 12 16:50 Applications
    drwxr-xr-x 3 root root 96 Nov 28 21:23 Sites
    drwxr-xr-x 14 root root 448 Nov 28 22:22 temp
    drwx------ 105 root root 3360 Dec 5 09:16 Library
    drwx------ 164 root root 5248 Dec 8 01:16 Downloads
    drwx------ 5 root root 160 Dec 8 01:27 Desktop
    drwx------ 26 root root 832 Dec 8 01:37 Documents

Volumes

As the documentation says:

Volumes are the preferred mechanism for persisting data generated by and used by Docker containers. While bind mounts depend on the host machine’s directory structure and OS, volumes are completely managed by Docker. Volumes have several advantages over bind mounts:


- Volumes are easier to back up or migrate than bind mounts.

- You can manage volumes using Docker CLI commands or the Docker API.

- Volumes work on both Linux and Windows containers.

- Volumes can be more safely shared among multiple containers.

- Volume drivers let you store volumes on remote hosts or cloud providers, to encrypt the contents of volumes, or to add other functionality.

- New volumes can have their content pre-populated by a container.

- Volumes on Docker Desktop have much higher performance than bind mounts from Mac and Windows hosts.

Well, there is not much to add here; the advantages of volumes over bind mounts are clear, especially the last point.

But the biggest drawback is the developer experience: volumes were never meant to satisfy this requirement, and using them for coding is counterintuitive at best and unusable at worst.

Let me demonstrate in practice how they work; I’ll show you in order:

- A javascript node API up and running on localhost

- Development lifecycle on the host

- Create a Docker Volume

- Create a container using the docker volume

- Developing workflow inside a container with a volume

(I am the king of typos, I’ve tried to record this asciinema without errors ten times, and this is the best I’ve finally achieved 🏆🏆🏆)

As you may have noticed, the biggest drawback here is that any change I make outside the container must be copied into the container with docker cp.

I could have mitigated this issue by installing vi inside the container, but I would have lost all my dotfiles, and for JavaScript I usually prefer VSCode anyway.
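For readers who prefer text to a recording, the volume-based workflow described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the volume name `app-code`, the container name `node-dev`, the `node:lts` image, and the `/usr/src/app` path are all hypothetical choices, not the exact ones used in the demo.

```shell
#!/bin/sh
# Override with DOCKER=echo to preview the commands instead of running them.
DOCKER="${DOCKER:-docker}"

# Hypothetical names used only for this sketch.
VOLUME=app-code
CONTAINER=node-dev

volume_workflow() {
  # 1. Create a named volume managed by Docker.
  "$DOCKER" volume create "$VOLUME"
  # 2. Start a long-running container with the volume mounted as the app directory.
  "$DOCKER" run -d --name "$CONTAINER" -v "$VOLUME":/usr/src/app -w /usr/src/app node:lts sleep infinity
  # 3. Copy the contents of the current directory into the container (and so into the volume).
  "$DOCKER" cp ./. "$CONTAINER":/usr/src/app
  # 4. Run the project tooling inside the container.
  "$DOCKER" exec "$CONTAINER" npm install
}
```

Calling `volume_workflow` from the project directory runs the four steps; step 3 is the one that has to be repeated after every edit made on the host, which is exactly the DX problem discussed here.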

Volumes vs. bind-mounts

Well, a long journey so far, and still not yet a solution 😎

At least we have learned two things:

- Bind mounts are the most natural way to move our files inside a container, but they suffer severe performance issues caused by file system sharing when the Docker engine runs in a VM.

- Volumes are way faster than bind mounts, but they lack the basics of the development experience (e.g., You and your local editor cannot see the files outside the container)

Benchmarks

To demonstrate how much the performance is affected based on different scenarios (bind, volumes), I’ve created a simple test suite, nothing super fancy, just running some npm install tests on an empty create-react-app project.

Let’s see them in action:

Environments:

- Pane top: macOS - Docker Desktop - virtioFS enabled

- Pane bottom: Linux

Tests (npm install):

- Native node on the host

- Docker without bind/volumes

- Docker with a bind mount + a volume just for node_modules

- Docker with a bind mount

Synthetic results

| Test | Operating system | Time (s) |
|---|---|---|
| Native | Linux | 5.73 |
| Docker | Linux | 5.64 |
| Docker - Bind Mount | Linux | 6.81 |
| Docker - Bind Mount + Volume | Linux | 6.18 |
| Native | macOS | 4.09 |
| Docker | macOS | 6.80 |
| Docker - Bind Mount + Volume | macOS | 7.65 |
| Docker - Bind Mount | macOS | 21.05 🏆 |

And finally, we can see the performance impact: with just the bind mount, macOS is roughly 3.5 times slower (about 10 times slower when using gRPC FUSE), and the culprit is the node_modules directory (328M and 37k files just for an empty React app).

Moving this directory (node_modules) into a volume brings the timing close to Linux: 7.65s.
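As a quick sanity check, the slowdown factors can be recomputed from the timings in the table. The sketch below is just arithmetic on those published numbers; the `ratio` helper is a name introduced here for illustration, and the exact multiplier naturally depends on which row is taken as the baseline.

```shell
#!/bin/sh
# ratio SLOW FAST: how many times slower SLOW is compared to FAST (both in seconds).
ratio() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Timings copied from the results table.
echo "macOS bind mount vs. Linux native:              $(ratio 21.05 5.73)x"
echo "macOS bind mount vs. Linux bind mount:          $(ratio 21.05 6.81)x"
echo "macOS bind + volume vs. Linux bind + volume:    $(ratio 7.65 6.18)x"
```

With these inputs, the first two ratios come out at 3.67 and 3.09, while the bind mount plus volume setup is only about 1.24 times slower than its Linux counterpart.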

I’ve just taken Node as an example, but other development ecosystems are affected by the same issue (yes, PHP, I am looking at you and your vendor directory 👀); more generally, any directory with hundreds of thousands of small files is devilish for performance.

Fixes

Now that we have a general overview of the moving pieces, how they work, and can be combined, the next question is:

What is the recommended method for decent performance and a good DX with Docker on Mac?

This is an excellent question!

Use VirtioFS

The short answer for now is: Docker Desktop for Mac with VirtioFS is a good compromise between performance and DX. Even if it is slower than Linux, the difference is negligible in most cases.

When developing a project in Node or PHP, you are not going to reinstall your node_modules/vendor from scratch every time; it is rare, and in those rare cases you will just wait a few more seconds.

Just keep in mind that:

- It is a closed-source product

- It requires a license depending on the usage

- Since it is a pretty recent technology, many issues and regressions remain.

So, what are the alternatives?

Bind mounts + Volume

As the results above show, this is the best approach to get almost native performance, but it comes with significant DX issues.

Let’s see an example:

We cannot see from our host the files being written by the container, and this is a big issue that can impact how we write the software and how our IDE will be capable of understanding the codebase we are working on.
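A minimal sketch of this setup, assuming a Node project in the current directory: the source code is bind mounted, while node_modules is shadowed by a named volume that lives inside the VM. The volume name `myapp_node_modules`, the `node:lts` image, the `/usr/src/app` path, and the `run_in_node` helper are assumptions made for the example.

```shell
#!/bin/sh
# Override with DOCKER=echo to preview the command instead of running it.
DOCKER="${DOCKER:-docker}"
# Hypothetical volume name; Docker creates the volume on first use.
VOLUME_NAME="${VOLUME_NAME:-myapp_node_modules}"

# Run a command in node:lts with the current directory bind mounted at
# /usr/src/app and node_modules stored in a named volume instead of on the host.
run_in_node() {
  "$DOCKER" run --rm \
    -v "$PWD":/usr/src/app \
    -v "$VOLUME_NAME":/usr/src/app/node_modules \
    -w /usr/src/app \
    node:lts "$@"
}
```

After `run_in_node npm install`, the node_modules directory on the Mac stays empty: the packages exist only in the volume, which is why a local editor cannot resolve them.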

How can we fix it? Well, it depends on how you are used to developing.

Terminal editor users

The only thing you need here is a container with the editor and the tools you need; mount your dotfiles and your code, and that’s it; you’ll enjoy an almost native experience.

> docker run --rm -it --user node -v $HOME/.vimrc:/home/node/.vimrc -v $PWD:/usr/src/app -w /usr/src/app node:lts bash

GUI Editors / IDE users

Here things become more complicated.

Desktop applications are bound to the operating system they are designed for, and they usually cannot understand much of the underlying abstractions, like a filesystem inside a Docker container.

As the problem is that we cannot read or write files from and to the docker volume, we need a tool capable of this two-way syncing.

One of the most prominent solutions is Mutagen.

Mutagen syncs files in both directions between the host and the container, as soon as changes happen. You can find here how to install it and how to configure it.

It is not very easy to grasp at the beginning, since it is composed of a daemon, a CLI tool, and some configuration files. It natively supports Docker Compose, and it was once part of Docker Desktop as an experimental feature, then deprecated, and later brought back by the Mutagen authors as a Docker Desktop extension.

Last but not least, it is also supported and integrated by the DDEV project.

DDEV is a tool to manage local development environments for PHP projects (it started with Drupal ❤️) and was then extended to many more frameworks. It is the best plug-and-play, batteries-included choice to manage your PHP project with Docker, no matter which operating system you run.

Well, what if we want to avoid installing other moving parts? Can we run our GUI Editor as a Docker container so we can access the files?

You can run them quite easily on Linux, but there isn’t a comparable straightforward solution to run Linux GUI applications on macOS; unless you install XQuartz (Xorg for macOS) and do some tricks.

Microsoft here did a very cool thing instead; they implemented a Wayland compositor for Windows capable of natively running Linux GUI applications: WSLg

Anyway, this is not a very practical way to use an editor, which will always be very limited and poorly integrated with the operating system.

The best solution would be to have an IDE capable of running on your local system while simultaneously being capable of using Docker Containers, having the best of both worlds.

Should we invent it? Luckily for us, no, we shouldn’t because someone already did it, and I am talking about Development Containers

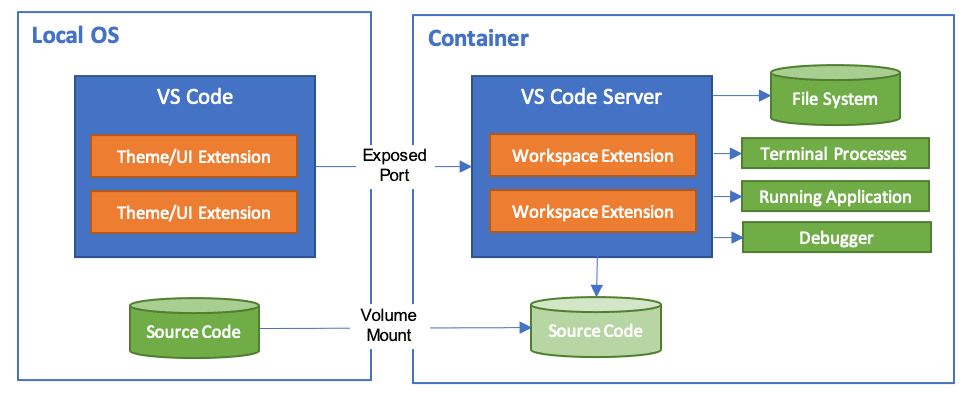

Development Containers

An open specification for enriching containers with development specific content and settings.

A Development Container (or Dev Container for short) allows you to use a container as a full-featured development environment. It can be used to run an application, to separate tools, libraries, or runtimes needed for working with a codebase, and to aid in continuous integration and testing. Dev containers can be run locally or remotely, in a private or public cloud.

There is not much to add here; the intent and the specs are straightforward and clear about the goals.

Microsoft created this project, and the spec is released under the CC BY 4.0 license.

Here you can find a list of “supporting tools”; for the moment, it is almost “all Microsoft” for the editors and cloud services part: we have, of course, Visual Studio Code and GitHub Codespaces.

However, the project is slowly gaining traction, even though there are several competitors and competing standards in this area.

Cloud companies and developer-focused startups envision a world made of cloud-based development environments, which have pros and cons. I’ll debate this topic in another post.

The most significant competitors now are:

- Gitpod

- GCP Cloud Workstations

- AWS Cloud9

- Docker Development Environment

How it works

You need to create a .devcontainer/devcontainer.json file inside the project’s root, and a supporting editor (AKA VSCode) will pick it up and run it.
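To give an idea of what such a file can look like, here is a minimal sketch that writes one for a Node project. The image tag, the extension ID, and the volume name are illustrative assumptions rather than the configuration of the PoC project; the `mounts` entry keeps node_modules in a named volume, and the `chown` step assumes the image's default non-root user is `node`.

```shell
#!/bin/sh
# Writes a minimal .devcontainer/devcontainer.json into the current directory.
# Image tag, extension ID and volume name are illustrative assumptions.
mkdir -p .devcontainer
cat > .devcontainer/devcontainer.json <<'EOF'
{
  "name": "node-app",
  "image": "mcr.microsoft.com/devcontainers/javascript-node:18",
  "customizations": {
    "vscode": {
      "extensions": ["dbaeumer.vscode-eslint"]
    }
  },
  "mounts": [
    "source=node-app-node_modules,target=${containerWorkspaceFolder}/node_modules,type=volume"
  ],
  "postCreateCommand": "sudo chown node node_modules && npm install"
}
EOF
```

Opening the folder in an editor that supports Dev Containers should then offer to reopen the project inside the container described by this file.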

Developing inside a container

You can find here a very good quick start. It covers almost all aspects, including using it with Docker Compose.

There is also a very nice guide on how to use it to improve the performance of macOS using Volumes instead of just bind mounts, which we have already discussed.

To make it easily understandable (at least for me), I’ve created a PoC project to connect all the wires; the project lives here: https://github.com/paolomainardi/docker-backstage-devcontainers and it’s an empty Backstage application to test the entire development workflow using the VSCode Dev Containers integration.

To conclude, the overall idea is excellent, and since it is an open standard, it could easily be adopted by other vendors; some efforts are already under way.

To summarize, the key points I like the most:

- Everything is container-based.

- I can define the VSCode extensions to install, so team members share the same development environment.

- Thanks to Dev Container Features, I can use Docker and docker-compose inside the container.

- SSH works out of the box (you must have the ssh-agent up and running).

- I can reuse the very same configuration locally or in the cloud.

Conclusions

This post started with the intent to quickly explain how to improve the performance of Docker on macOS, but at the same time, I felt the need not to leave out any of the basic concepts that are fundamental to understanding your system and getting the most out of it.

The big question here is, why not just use Linux?

This isn’t a trivial question; I use Linux as my daily driver (I’m writing this post on Linux), and I consider it the best development environment you can use.

But the hardware ecosystem must be taken into account, especially now that Apple Silicon machines are on the market; they are great in terms of performance, battery life, and service.

So why not get the best of both worlds? Machines are powerful enough today to run both seamlessly. There are other creative ways to combine them, as Mitchell Hashimoto did by merging macOS and NixOS; lovely.

Use what you like and what you find better for your workflow and, why not, what makes you happy today.

This is also a gift for the future me: the next time I have to explain to someone how Docker works on Linux and elsewhere, I’ll use this guide as a general reference.

Thanks for reading all of this, and if you find something wrong or want to discuss some topics further, get in touch with me.